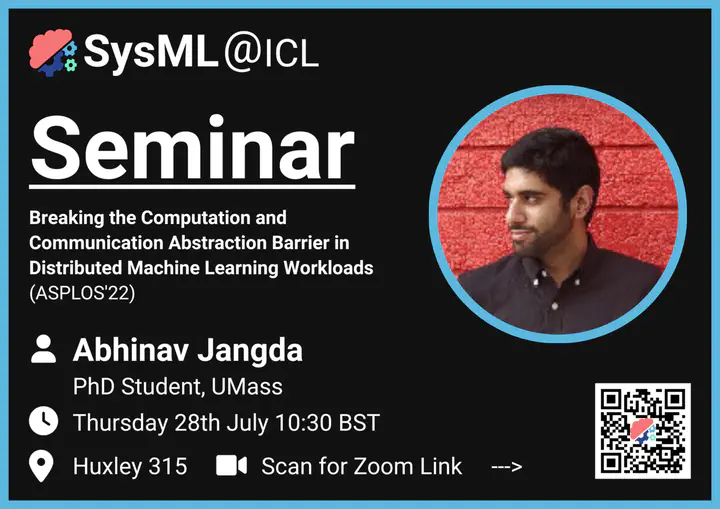

Seminar #3 - Abhinav Jangda - CoCoNet (ASPLOS'22)

Abstract

Recent trends towards large machine learning models require both training and inference tasks to be distributed. Considering the huge cost of training these models, it is imperative to unlock optimizations in computation and communication to obtain the best performance. However, the current logical separation between computation and communication kernels in machine learning frameworks misses optimization opportunities across this barrier. Breaking this abstraction exposes many optimizations that can improve the performance of distributed workloads, but applying them manually requires modifying the underlying computation and communication libraries for each scenario, which is both time-consuming and error-prone.

In this talk, I will present CoCoNet, a domain-specific language for expressing a distributed machine learning program in the form of computation and communication operations. CoCoNet provides a set of semantics-preserving transformations that jointly optimize communication and computation workloads. I will also present how we used CoCoNet to significantly optimize data-, model-, and pipeline-parallel workloads in large language models with only a few lines of code.

Speaker Bio

Abhinav Jangda is a PhD student at the University of Massachusetts Amherst and will join Microsoft Research in Fall 2022. His research focuses on developing programming language abstractions and compilation techniques to help programmers leverage large-scale systems efficiently. His work has been featured in an invited article in USENIX ;login: and has received an ACM SIGPLAN Distinguished Paper Award at OOPSLA and a Best Paper Award at PACT.