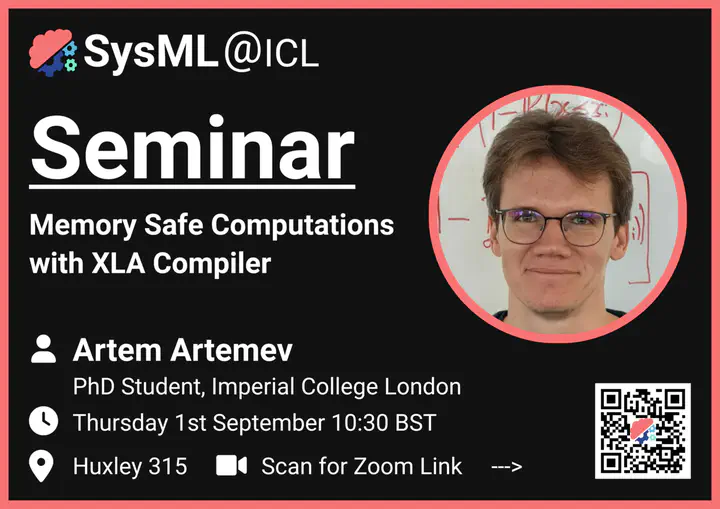

Seminar #4 - Artem Artemev - Memory Safe Computations with XLA Compiler

Abstract

Software packages like TensorFlow and PyTorch are designed to support linear algebra operations, and their speed and usability determine their success. However, by prioritising speed, they often neglect memory requirements.

As a consequence, implementations of memory-intensive algorithms that are convenient in terms of software design often cannot be run for large problems due to memory overflows. Memory-efficient solutions require complex programming approaches with significant logic outside the computational framework. This impairs the adoption and use of such algorithms. To address this, we developed an XLA compiler extension that adjusts the computational dataflow representation of an algorithm according to a user-specified memory limit.

We show that k-nearest neighbour and sparse Gaussian process regression methods can be run at a much larger scale on a single device, where standard implementations would have failed. Our approach leads to better use of hardware resources. We believe that further focus on removing memory constraints at a compiler level will widen the range of machine learning methods that can be developed in the future.
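To make the memory problem concrete, here is a minimal sketch of the kind of hand-written splitting that the abstract says is normally needed for k-nearest neighbour search. It is not the XLA extension from the talk. The function name `knn_chunked` and the parameter `memory_limit_bytes` are hypothetical, and NumPy stands in for TensorFlow or PyTorch. A naive implementation materialises the full queries-by-data distance matrix at once; the sketch processes queries in blocks sized so that each block's distance matrix fits within the given limit.

```python
import numpy as np


def knn_chunked(queries, data, k, memory_limit_bytes):
    """Return indices of the k nearest rows of `data` for each row of `queries`.

    Hypothetical helper: only a block of the distance matrix is held in
    memory at a time, sized from `memory_limit_bytes` (assumes float64).
    """
    queries = np.asarray(queries, dtype=np.float64)
    data = np.asarray(data, dtype=np.float64)
    n_queries, n_data = queries.shape[0], data.shape[0]

    # One row of the distance matrix holds n_data float64 values.
    bytes_per_row = n_data * np.dtype(np.float64).itemsize
    chunk = max(1, memory_limit_bytes // bytes_per_row)

    data_sq = np.sum(data**2, axis=1)
    result = np.empty((n_queries, k), dtype=np.int64)

    for start in range(0, n_queries, chunk):
        q = queries[start:start + chunk]
        # Squared Euclidean distances for this block only.
        d2 = np.sum(q**2, axis=1)[:, None] - 2.0 * (q @ data.T) + data_sq[None, :]
        # Pick the k smallest per row, then order those k by distance.
        idx = np.argpartition(d2, k - 1, axis=1)[:, :k]
        order = np.argsort(np.take_along_axis(d2, idx, axis=1), axis=1)
        result[start:start + chunk] = np.take_along_axis(idx, order, axis=1)

    return result


data = np.array([[0.0], [1.0], [2.0], [10.0]])
queries = np.array([[0.1], [9.0]])
# 32 bytes allows one row of four float64 distances per block.
neighbours = knn_chunked(queries, data, k=2, memory_limit_bytes=32)
```

The chunking logic here lives in user code, outside the computational framework, which is the burden the abstract describes; the talk's approach moves this kind of splitting into the compiler.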

Speaker Bio

Artem Artemev is a second-year PhD student at Imperial College London, supervised by Mark van der Wilk. Before starting his PhD, Artem worked as a Machine Learning Researcher and Engineer for start-up companies. His primary interest lies in machine learning theory and approximate Bayesian inference, particularly non-parametric machine learning methods like Gaussian processes. His recent work addresses the scalability of Gaussian processes while retaining the predictive properties of the model when approximations are involved.