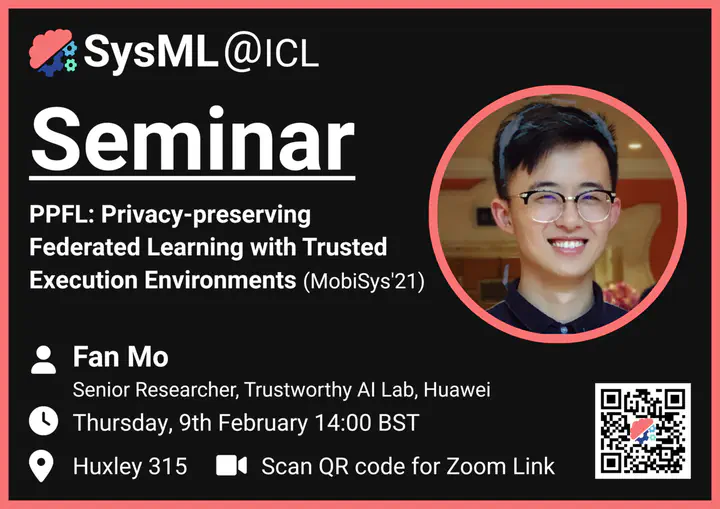

Seminar #7 - Fan Mo - PPFL (MobiSys'21)

Abstract

Although user data are not collected at a centralized location in federated learning (FL), adversaries can still execute various types of privacy attacks to retrieve sensitive information from the FL model parameters themselves, thus breaking the initial privacy promises behind FL.

PPFL is a practical, privacy-preserving federated learning framework proposed to protect clients’ private information against known privacy-related attacks. PPFL adopts greedy layer-wise FL training and always updates layers inside Trusted Execution Environments (TEEs) at both the server and the clients. We implemented PPFL with a mobile-like TEE (i.e., TrustZone) and a server-like TEE (i.e., Intel SGX) and empirically tested its performance. For the first time, we showed the possibility of fully guaranteeing privacy while achieving ML model utility comparable to regular end-to-end FL, without significant communication and system overhead.
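To give a rough feel for what "greedy layer-wise FL training" means, here is a minimal Python sketch. It is an illustration under stated assumptions, not the PPFL implementation: the names `fed_avg`, `local_update`, and `greedy_layerwise_fl` are hypothetical, local training is replaced by a toy step toward a per-client target, and TEE isolation is not modeled at all (in PPFL, per the abstract, the layer being updated would be handled inside TrustZone on clients and SGX on the server).

```python
from typing import List

Layer = List[float]


def fed_avg(updates: List[Layer], sizes: List[int]) -> Layer:
    """Average client copies of one layer, weighted by client data size."""
    total = sum(sizes)
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


def local_update(layer: Layer, target: Layer, lr: float = 0.5) -> Layer:
    """Toy stand-in for local training: one step toward a client-specific target.

    Assumption: a real client would run SGD on its private data instead.
    """
    return [w + lr * (t - w) for w, t in zip(layer, target)]


def greedy_layerwise_fl(
    model: List[Layer],
    client_targets: List[List[Layer]],
    sizes: List[int],
    rounds_per_layer: int = 3,
) -> List[Layer]:
    """Train one layer at a time; earlier layers stay frozen once trained."""
    model = [list(layer) for layer in model]  # do not mutate the caller's model
    for idx in range(len(model)):
        for _ in range(rounds_per_layer):
            # Each client updates only the currently active layer.
            updates = [
                local_update(model[idx], targets[idx])
                for targets in client_targets
            ]
            # The server aggregates that single layer.
            model[idx] = fed_avg(updates, sizes)
        # Layer idx is now frozen; the loop moves on to the next layer.
    return model


if __name__ == "__main__":
    initial = [[0.0, 0.0], [0.0]]
    targets = [
        [[1.0, 1.0], [2.0]],  # hypothetical client A
        [[3.0, 3.0], [4.0]],  # hypothetical client B
    ]
    print(greedy_layerwise_fl(initial, targets, sizes=[1, 1]))
```

The point of the sketch is the loop structure: only one layer is exchanged and aggregated at any time, which is what makes it plausible to keep the layer under training inside a memory-constrained TEE.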

Speaker Bio

Fan Mo is a Senior Researcher at Trustworthy AI Lab, Huawei. Before that, he completed his PhD at Imperial College London in 2022, advised by Professor Hamed Haddadi. He also worked for short periods at Nokia Bell Labs, Arm Research, and Telefónica Research. His research focuses on data privacy and trustworthiness in machine learning at the edge, machine learning in confidential computing, and distributed machine learning on lightweight mobile/IoT devices.