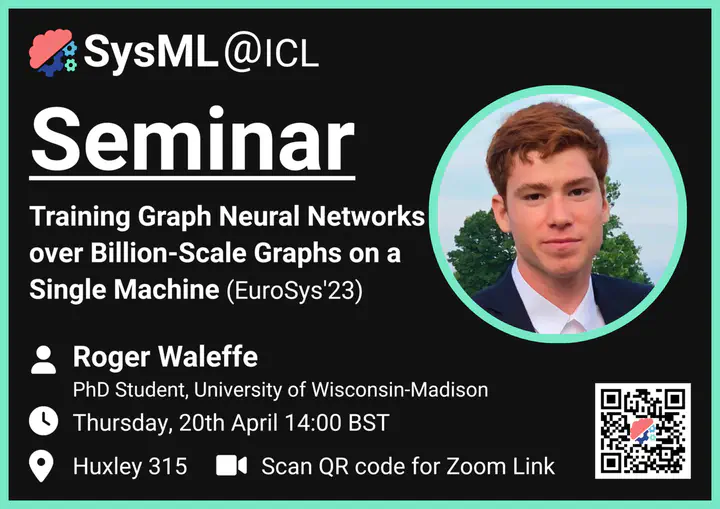

Seminar #8 - Roger Waleffe - MariusGNN (EuroSys'23)

Abstract

In this talk, I will present MariusGNN, a system for training Graph Neural Networks (GNNs) on a single machine. Using only one GPU, a machine with 60GB of RAM, and a large SSD, MariusGNN can learn vector representations for all 3.5B nodes (web pages) in the Common Crawl 2012 hyperlink graph containing 128B edges (hyperlinks between pages).

To support such billion-scale graphs, MariusGNN uses pipelined mini-batch training and the entire memory hierarchy, including disk. This architecture requires MariusGNN to address two main challenges. First, it optimizes GNN mini-batch preparation to make it as efficient as possible on a machine with fixed resources. Second, it employs techniques to use disk storage during training without bottlenecking throughput or hurting model accuracy. This talk will highlight how MariusGNN with one GPU can reach the same level of model accuracy up to 8x faster than existing industrial systems running on up to eight GPUs. By scaling training using disk storage, MariusGNN deployments on billion-scale graphs are up to 64x cheaper in monetary cost than those of the competing systems.

Speaker Bio

Roger Waleffe is a PhD student at the University of Wisconsin-Madison working under the supervision of Theodoros Rekatsinas. His work focuses on the intersection of systems and algorithmic challenges for IO-aware training of large-scale ML models. Roger is a lead in the Marius project (https://marius-project.org/), which aims to make the use of Graph Neural Networks and Graph Embeddings over billion-scale graphs easier, faster, and cheaper. Roger holds a B.S. and an M.S. in computer science from UW-Madison and is a recipient of the UW-Madison departmental graduate research fellowship and the Goldwater Scholarship.